Preface: In the 1.5.2 release we’ve implemented a couple of fixes regarding issues related to SPARQL’s bottom-up evaluation approach and associated variable scoping problems. If you encounter regressions with some of your queries after upgrading to 1.5.2, this blog post may help you identify ill-designed queries that are not in line with the official SPARQL 1.1 semantics. Please consult our Wiki for a more comprehensive discussion of SPARQL’s bottom-up semantics.

In one of our recent posts, titled “In SPARQL, Order Matters”, we discussed implications of SPARQL’s “as written” evaluation semantics. Another tricky aspect of SPARQL is its bottom-up evaluation semantics. Informally speaking, bottom-up evaluation means that subqueries and nested groups are (logically) evaluated first. As a consequence, whatever evaluation order a SPARQL engine actually chooses must yield the same results as the bottom-up order in order to be valid.

Blazegraph does not normally use bottom-up evaluation. Instead, it prefers to reorder joins and join groups in order to reduce the amount of data read and the size of the intermediate solutions flowing through the query, using what is known as left-deep evaluation. Some queries, however, can only be evaluated by bottom-up plans, which are often much less efficient. This happens when some variables are not visible in some intermediate scopes, so that the more efficient left-deep plans cannot be used.

This guide will help you understand why this happens, how to recognize when bottom-up evaluation semantics are being used, and what you can do to avoid the problem and get more performance out of your SPARQL queries. It also sketches Blazegraph’s built-in optimization techniques for efficiently dealing with issues induced by SPARQL’s bottom-up semantics.

Illustrating the Problem by Example

Let’s start out with a very simple dataset that we will use throughout the upcoming examples:

<http://example.com/Alice> a <http://example.com/Person> .

<http://example.com/Flipper> a <http://example.com/Animal> .

That is, we have two triples, one stating that Alice is a Person and the other stating that Flipper is an Animal. Let’s start out with a simple query to illustrate what bottom-up evaluation actually means and which problems it can cause:

SELECT ?s ?personType WHERE {
  BIND(<http://example.com/Person> AS ?personType)
  {
    ?s a ?o
    FILTER(?o=?personType)
  }
}

The query aims to extract all instances of type <http://example.com/Person>. To this end, variable ?personType is bound to the URI <http://example.com/Person> using the BIND keyword in the first line of the WHERE clause, the triple pattern “?s a ?o” searches for all typed instances, and the filter retains those whose type coincides with the binding of ?personType. Looks reasonable, doesn’t it? But there is a “gotcha”: the ?personType variable will not be bound when the inner group graph pattern is evaluated! We will explain why and what to do about this below.

Let’s see what happens according to SPARQL bottom-up semantics. Generally speaking, bottom-up evaluation means that, from a logical point of view, we start by evaluating the “leaf nodes” of the query tree and then use these results to iteratively evaluate the composite nodes at higher levels. Considering our query above, one node in the query tree is the ({}-enclosed) subgroup consisting of the triple pattern and the filter. Here’s what happens when evaluating this node:

1. We evaluate the triple pattern “?s a ?o” against the data, which gives us two intermediate result mappings, namely

{ ?s -> <http://example.com/Alice>, ?o -> <http://example.com/Person> },

{ ?s -> <http://example.com/Flipper>, ?o -> <http://example.com/Animal> }

2. Next, we apply the FILTER(?o=?personType) to the two mappings from step 1.

And here’s the problem: our two intermediate result mappings do not contain a binding for variable ?personType (because the latter has not yet been bound when starting evaluation bottom-up). In such cases, the SPARQL semantics define that the FILTER condition evaluates to an “error”, in which case the FILTER rejects the mapping. Both mappings are rejected, so we end up with the empty result for the subgroup, and joining the empty result with the binding from the outer BIND leaves the whole query with the empty result as well.
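One way to repair this first query, offered here as a minimal sketch rather than as the approach prescribed by the post, is to drop the nested group so that the BIND, the triple pattern, and the FILTER all live in the same scope. The FILTER then applies to the solutions of the whole group, in which ?personType is bound:

```sparql
# Sketch of a fix: no nested { } group, so ?personType is in scope
# when the FILTER is applied to the solutions of the group.
SELECT ?s ?personType WHERE {
  BIND(<http://example.com/Person> AS ?personType)
  ?s a ?o
  FILTER(?o = ?personType)
}
```

On the two-triple example dataset, this should return a single solution binding ?s to <http://example.com/Alice> and ?personType to <http://example.com/Person>. If the nested group has to stay for other reasons, moving the BIND inside the group achieves the same effect.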

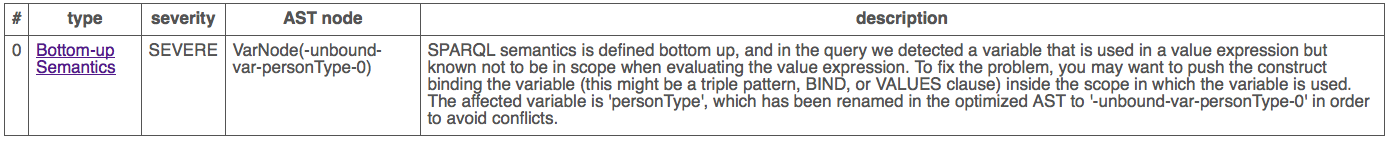

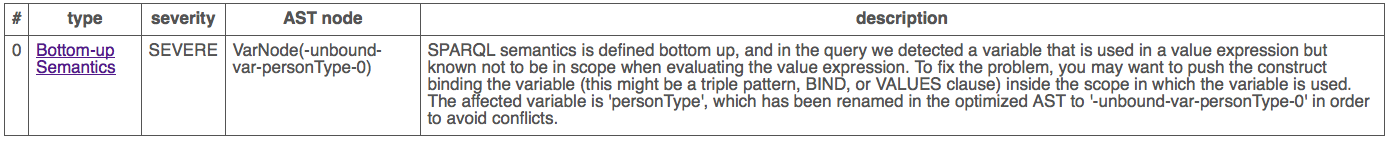

SPARQL has a lot of great features, but this is not one of them. To help make people’s lives easier, we are extending Blazegraph’s EXPLAIN feature to also report problems such as this in your queries (see figure). This will be part of the next Blazegraph release.

Other Problematic Cases

Well, you may now argue that the query above is unnecessarily complex, that it is somewhat silly to put the triple pattern in a dedicated subgroup, and that no one would ever come up with such a query. And (with the exception of the blog author) you’re probably right, but look at the following one:

SELECT ?person ?nonPerson ?type WHERE {
  BIND(<http://example.com/Person> AS ?type)
  {
    ?person a ?o
    FILTER(?o=?type)
  }
  UNION
  {
    ?nonPerson a ?o
    FILTER(?o!=?type)
  }
}

In this UNION query, the BIND expression is used to introduce a variable binding that is intended to be reused in the two parts of the UNION: the idea is to extract all instances of type <http://example.com/Person> in the first part of the UNION, and all the others in the second part of the UNION.

But again, this query does not work as desired: the two blocks of the UNION open up new scopes, in which the ?type variable is not known. For the same reasons as in the example before, both FILTER expressions evaluate to an error and we end up with the empty result. One way to fix this is by (redundantly) pushing the BIND into the two parts of the UNION:

SELECT ?person ?nonPerson ?type WHERE {
  {
    BIND(<http://example.com/Person> AS ?type)
    ?person a ?o
    FILTER(?o=?type)
  }
  UNION
  {
    BIND(<http://example.com/Person> AS ?type)
    ?nonPerson a ?o
    FILTER(?o!=?type)
  }
}

The latter query will give us the desired results without any performance penalty, namely:

?person                    | ?nonPerson                   | ?type
---------------------------------------------------------------------------------------
<http://example.com/Alice> |                              | <http://example.com/Person>
                           | <http://example.com/Flipper> | <http://example.com/Person>

Other Problematic Cases: BIND and VALUES

The problem sketched above is not restricted to the usage of variables in FILTER expressions. Similar issues may arise whenever we use variables in nodes that “consume” these variables without matching them against the dataset. More concretely, using a triple pattern with a variable in an inner scope is not a problem: the variables are matched against the dataset independently of the outside, and will be joined with the outside part at a later point in time. But when using SPARQL 1.1 constructs such as BIND or VALUES clauses, you may run into the same problems as sketched before by means of the FILTER expression. Look at the following query, which aims at extracting all persons (first part of the UNION) and all animals (second part of the UNION), including the respective types:

SELECT ?s ?type WHERE {
  BIND("http://example.com/" AS ?typeBase)
  {
    BIND(URI(CONCAT(?typeBase,"Person")) AS ?type)
    ?s a ?o
    FILTER(?o=?type)
  }
  UNION
  {
    BIND(URI(CONCAT(?typeBase,"Animal")) AS ?type)
    ?s a ?o
    FILTER(?o=?type)
  }
}

The problem is essentially the same: we bind variable ?typeBase outside. In the inner UNION blocks, we try to bind variable ?type based on ?typeBase – but the latter is not in scope here. Hence, the query returns the empty result.
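By analogy with the UNION fix shown earlier, one possible repair (a sketch, not the only option) is to repeat the ?typeBase binding inside each UNION block, so that it is in scope when ?type is computed:

```sparql
# Sketch of a fix: ?typeBase is bound inside each UNION block,
# so the inner BIND can see it when constructing ?type.
SELECT ?s ?type WHERE {
  {
    BIND("http://example.com/" AS ?typeBase)
    BIND(URI(CONCAT(?typeBase, "Person")) AS ?type)
    ?s a ?o
    FILTER(?o = ?type)
  }
  UNION
  {
    BIND("http://example.com/" AS ?typeBase)
    BIND(URI(CONCAT(?typeBase, "Animal")) AS ?type)
    ?s a ?o
    FILTER(?o = ?type)
  }
}
```

On the example dataset, this should return Alice with type <http://example.com/Person> and Flipper with type <http://example.com/Animal>. The duplication is the price for keeping every consumed variable in the scope that uses it.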

Optimizations in Blazegraph

Strictly following bottom-up semantics when implementing a query engine is typically not a good choice when it comes to evaluation performance. Top-down evaluation, where we inject mappings from previous evaluation steps into subsequent subgroups, can lead to significant speedups. The good news is that, for a broad range of SPARQL queries, bottom-up and top-down evaluation coincide. This holds, for instance, for the complete class of SPARQL queries built only from triple patterns connected through “.” (so-called conjunctive queries).

When it comes to Blazegraph, the basic evaluation approach is top-down. For queries where top-down and bottom-up evaluation differ, Blazegraph rewrites the query in such a way that its top-down evaluation result coincides with the bottom-up result, where possible (wherever this is not possible, it essentially switches to bottom-up evaluation for that part of the query). Various techniques and tricks are implemented in Blazegraph’s optimizer for this purpose: in many cases, subgroups can simply be flattened without changing semantics, variables in subgroups that are known to be unbound can be renamed to avoid clashes, etc. With the fixes in 1.5.2 we’ve ruled out various inconsistencies between Blazegraph and the official W3C spec. If you plan to migrate from previous versions to 1.5.2 or later, we recommend reading the extended discussion of bottom-up semantics in our Wiki.
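As a hedged illustration of the flattening case (a hand-written example, not actual output of Blazegraph’s optimizer), consider a subgroup that contains only a triple pattern. The inner pattern is matched against the data on its own and then joined with the outer pattern, so the nested and the flat form yield the same solutions:

```sparql
# Nested form: the inner group holds only a triple pattern, so it does
# not "consume" any variable from the outer scope.
SELECT ?s ?o WHERE {
  ?s a ?o .
  {
    ?s a <http://example.com/Person>
  }
}

# Flattened equivalent (same solutions):
# SELECT ?s ?o WHERE {
#   ?s a ?o .
#   ?s a <http://example.com/Person>
# }
```

On the example dataset, both forms should return the single solution for Alice. Contrast this with the earlier examples, where the subgroup contained a FILTER or BIND over an outer variable and flattening would therefore change the result.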

Summary

Although some of the examples above were deliberately simplistic in order to illustrate the issues arising from SPARQL’s bottom-up semantics, we have observed ill-designed queries of this style in practice, both in our own applications and in customer applications. We hope that the examples and guidelines in this post help our readers and users avoid the common pitfalls and write better, standards-compliant SPARQL in the future.

We’d love to hear from you.

Have you had similar experiences with SPARQL’s semantics? Or do you have a cool new application using Blazegraph, or are you interested in understanding how to make Blazegraph work best for your application? Get in touch or send us an email at blazegraph at blazegraph.com.
